Optimizing the Resource Usage of Actor-based Systems with Reinforcement Learning

15 September 2021

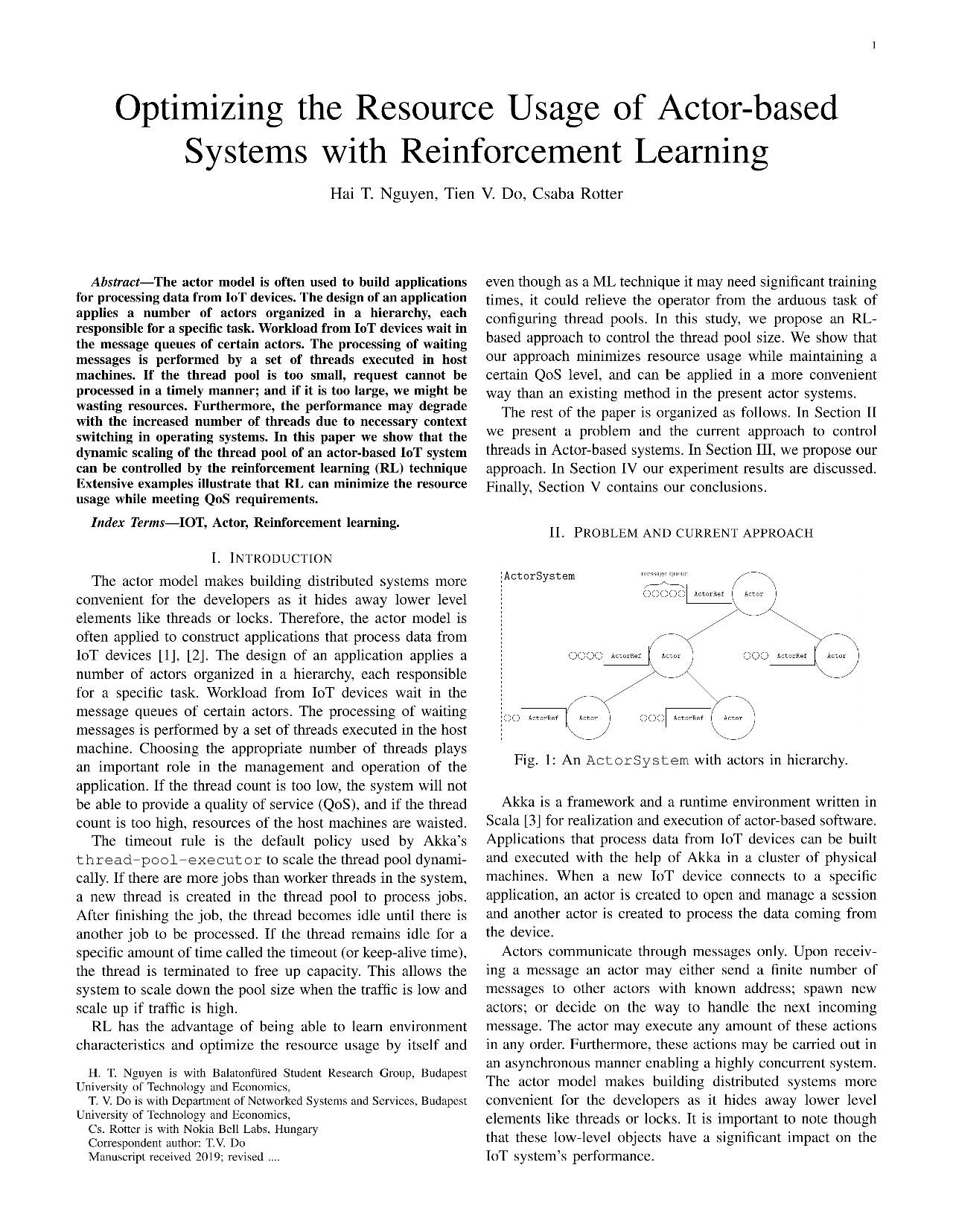

The actor model is often used to build applications that process data from IoT devices. Such an application is designed as a hierarchy of actors, each responsible for a specific task. Messages from IoT devices wait in the message queues of certain actors, and the waiting messages are processed by a pool of threads running on host machines. If the thread pool is too small, requests cannot be processed in a timely manner; if it is too large, resources are wasted. Furthermore, performance may degrade as the number of threads increases because of the context switching required in the operating system. In this paper we show that the dynamic scaling of the thread pool of an actor-based IoT system can be controlled by reinforcement learning (RL). Extensive examples illustrate that RL can minimize resource usage while meeting QoS requirements.
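To make the idea of RL-controlled thread pool scaling concrete, the following is a minimal sketch, not the method used in the paper. It assumes tabular Q-learning, a toy single-queue environment, and a reward that charges one unit per thread plus a fixed penalty when the backlog exceeds a limit. All names and constants (`MAX_THREADS`, `QOS_QUEUE_LIMIT`, `step`, `train`, the arrival range, the service rate) are hypothetical and chosen only for illustration.

```python
import random

MAX_THREADS = 8          # hypothetical upper bound on the pool size
ACTIONS = (-1, 0, 1)     # shrink, keep, or grow the pool by one thread
QUEUE_CAP = 50           # hypothetical cap on waiting messages
QOS_QUEUE_LIMIT = 10     # hypothetical QoS proxy: a larger backlog is a violation


def bucket(queue_len):
    """Discretize the backlog into a small number of states."""
    return min(queue_len // 5, 10)


def step(queue_len, threads, action, rng):
    """Toy environment: random arrivals, each thread serves 2 messages per tick."""
    threads = max(1, min(MAX_THREADS, threads + action))
    arrivals = rng.randint(4, 10)
    served = 2 * threads
    queue_len = max(0, min(QUEUE_CAP, queue_len + arrivals - served))
    # The reward trades resource usage (thread count) against a QoS penalty.
    reward = -threads - (20 if queue_len > QOS_QUEUE_LIMIT else 0)
    return queue_len, threads, reward


def train(episodes=300, ticks=200, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {}  # maps (backlog bucket, threads) to a list of action values
    for _ in range(episodes):
        queue_len, threads = 0, 1
        for _ in range(ticks):
            state = (bucket(queue_len), threads)
            values = q.setdefault(state, [0.0] * len(ACTIONS))
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = values.index(max(values))
            queue_len, threads, reward = step(queue_len, threads, ACTIONS[a], rng)
            nxt = q.setdefault((bucket(queue_len), threads), [0.0] * len(ACTIONS))
            # Standard Q-learning update toward the bootstrapped target.
            values[a] += alpha * (reward + gamma * max(nxt) - values[a])
    return q


def greedy_action(q, queue_len, threads):
    """Return the pool-size change with the highest learned value."""
    values = q.get((bucket(queue_len), threads), [0.0] * len(ACTIONS))
    return ACTIONS[values.index(max(values))]
```

After calling `q = train()`, `greedy_action(q, queue_len, threads)` returns -1, 0, or +1 as the suggested change to the pool size for the observed backlog. A real controller would presumably use measured response times rather than queue length as its QoS signal; the backlog threshold here is only a stand-in.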